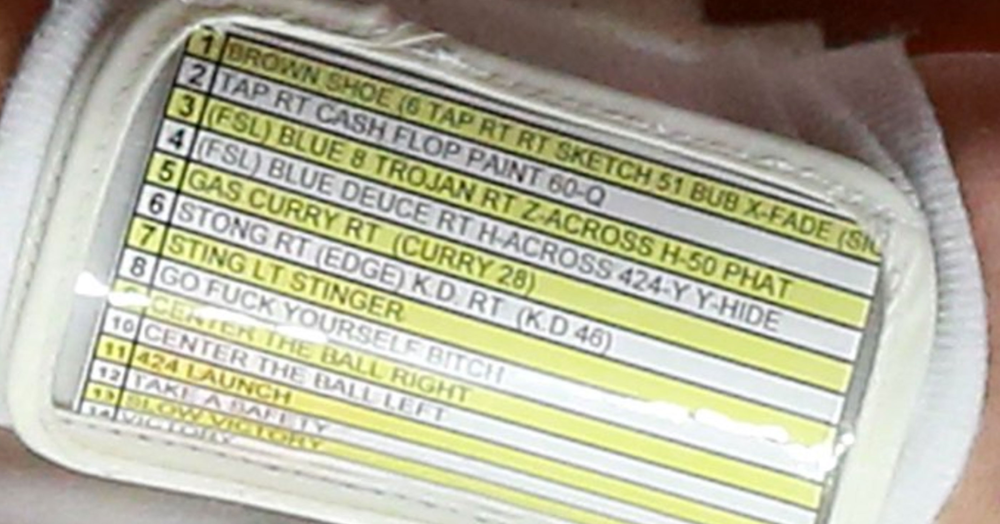

Tailgate Donations

-

Posts

856 -

Joined

-

Last visited